ETL (Extract, Transform, Load) processes move data out of source systems, reshape it, and deliver it to a destination where it can be analyzed. This article walks through each of the three phases, the tools commonly used to run them, and the practices that keep a pipeline efficient and reliable.

Overview of ETL Processes

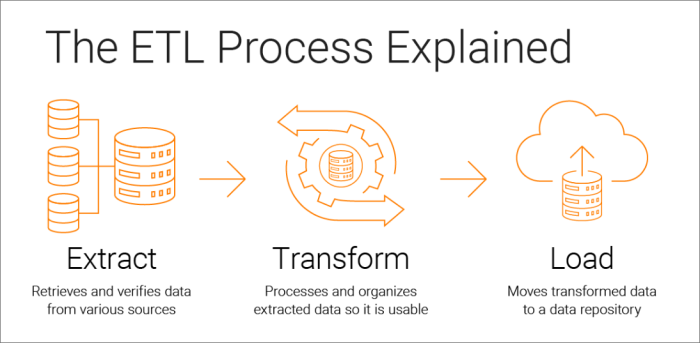

ETL stands for Extract, Transform, Load. It refers to the process of extracting data from various sources, transforming it into a usable format, and loading it into a target database or data warehouse. ETL processes are crucial for ensuring that data is accurate, consistent, and ready for analysis.
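As a rough illustration of the three steps, here is a minimal sketch that uses only the Python standard library. The CSV sample, the `payments` table, and the function names are assumptions made up for this example; a real pipeline would read from actual source systems and write to a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would be a file, an API, or a database.
RAW_CSV = """id,name,amount
1, alice ,10.50
2,BOB,3
"""


def extract(text):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and normalise names, convert strings to numbers."""
    return [
        (int(r["id"]), r["name"].strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(conn, records):
    """Load: write the cleaned records into the (assumed) target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn, text=RAW_CSV):
    """Run extract, transform, and load in order, then return what was stored."""
    load(conn, transform(extract(text)))
    return conn.execute(
        "SELECT id, name, amount FROM payments ORDER BY id"
    ).fetchall()


if __name__ == "__main__":
    print(run_pipeline(sqlite3.connect(":memory:")))
```

Keeping each phase in its own function makes it easier to test and replace one phase without touching the others.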

Commonly Used ETL Tools

- Talend

- Informatica PowerCenter

- Microsoft SQL Server Integration Services (SSIS)

- Apache NiFi

These tools help automate the ETL process, making it more efficient and less prone to errors.

Importance of ETL Processes in Data Management

ETL processes play a vital role in data management for several reasons:

- Data Integration: ETL processes allow for the integration of data from multiple sources into a unified format, enabling better analysis and decision-making.

- Data Quality: By transforming and cleaning data during the ETL process, organizations can ensure that the data is accurate, complete, and consistent.

- Efficiency: Automating ETL processes saves time and resources, making data management more efficient and cost-effective.

- Scalability: ETL processes can handle large volumes of data, making them essential for organizations dealing with big data.

Extract Phase

The extract phase in ETL processes involves retrieving data from various sources to be used in the transformation and loading stages. This initial step is crucial for ensuring that relevant data is gathered efficiently.

Methods for Data Extraction

- Full Extraction: Involves extracting all data from the source systems, which can be time-consuming and resource-intensive.

- Incremental Extraction: Extracts only the data that has been added or modified since the last extraction, typically by filtering on a timestamp or version column. This reduces processing time and resources.

- Change Data Capture (CDC): Identifies inserts, updates, and deletes as they happen in the source system, often by reading the database's transaction log, so that only the changes are transferred and processed.
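Incremental extraction is often implemented with a "watermark": the pipeline remembers the latest modification time it has seen and asks only for newer rows on the next run. The sketch below assumes a hypothetical `customers` table with an `updated_at` column stored as ISO 8601 text; both names are illustrative, not taken from any particular system.

```python
import sqlite3


def extract_incremental(conn, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only when something was actually extracted.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


def make_demo_source():
    """Build an in-memory stand-in for a source system (demo data only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT)"
    )
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [
            (1, "Ada", "2024-01-01T00:00:00"),
            (2, "Grace", "2024-01-02T00:00:00"),
            (3, "Linus", "2024-01-03T00:00:00"),
        ],
    )
    return conn


if __name__ == "__main__":
    source = make_demo_source()
    changed, watermark = extract_incremental(source, "2024-01-01T00:00:00")
    print(changed, watermark)
```

Note that a timestamp filter like this cannot see deleted rows, which is one reason log-based CDC is used when deletes must be propagated.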

Challenges in the Extraction Process

- Data Volume: Dealing with large volumes of data during extraction can lead to performance issues and increased processing time.

- Data Quality: Ensuring the accuracy and completeness of extracted data can be challenging, especially when dealing with multiple source systems.

- Real-time Extraction: Extracting data in real-time to keep up with constantly changing data sources can be complex and resource-intensive.

- Security Concerns: Safeguarding sensitive data during the extraction process to prevent unauthorized access or data breaches is a critical challenge.

Transform Phase

The transformation phase is a crucial step in the ETL process where the extracted data is cleaned, formatted, and restructured to fit the desired target schema. This phase ensures that the data is accurate, consistent, and reliable for analysis and reporting purposes.

Examples of Data Transformations

- Changing data types: Converting strings to dates, integers, or decimals for better analysis.

- Normalization: Splitting data into multiple tables to reduce redundancy and improve data integrity.

- Aggregation: Summarizing data to provide insights at a higher level of abstraction.

- Derivation: Creating new columns or variables based on existing data for further analysis.
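Three of the transformations listed above (type conversion, derivation, and aggregation) can be sketched in a few lines of plain Python. The order records and field names here are invented for illustration; normalization is left out because it depends on the target schema.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal

# Hypothetical extracted rows: every value arrives as a string.
RAW_ORDERS = [
    {"order_date": "2024-03-01", "region": "EU", "qty": "2", "unit_price": "9.99"},
    {"order_date": "2024-03-01", "region": "US", "qty": "1", "unit_price": "20.00"},
    {"order_date": "2024-03-02", "region": "EU", "qty": "3", "unit_price": "5.00"},
]


def convert_types(row):
    """Changing data types: strings become date, int, and Decimal values."""
    return {
        "order_date": date.fromisoformat(row["order_date"]),
        "region": row["region"],
        "qty": int(row["qty"]),
        "unit_price": Decimal(row["unit_price"]),
    }


def derive_total(row):
    """Derivation: add a 'total' column computed from existing columns."""
    return {**row, "total": row["qty"] * row["unit_price"]}


def aggregate_by_region(rows):
    """Aggregation: sum the derived totals per region."""
    totals = defaultdict(Decimal)
    for row in rows:
        totals[row["region"]] += row["total"]
    return dict(totals)


if __name__ == "__main__":
    typed = [derive_total(convert_types(r)) for r in RAW_ORDERS]
    print(aggregate_by_region(typed))
```

`Decimal` is used for prices instead of `float` so that monetary sums do not pick up binary rounding errors.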

Data Quality Assurance in Transformation

During the transformation phase, data quality is ensured through various methods to maintain the integrity and accuracy of the data. This includes:

- Data profiling: Analyzing the data to understand its structure, patterns, and anomalies.

- Data cleansing: Removing or correcting inconsistencies, duplicates, and errors in the data.

- Data validation: Verifying that the data meets specific criteria or constraints set for quality assurance.

- Data enrichment: Enhancing the data with additional information from external sources to improve its quality and context.
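Cleansing and validation can be pictured as two small functions: one that repairs what can be repaired, and one that reports which rules a record still breaks. The rules below (an email must contain `@`, an age must fall between 0 and 130) and the record layout are assumptions chosen only to keep the sketch short.

```python
def cleanse(rows):
    """Data cleansing: normalise emails and drop duplicate ids (first one wins)."""
    seen = set()
    cleaned = []
    for row in rows:
        row = {**row, "email": row["email"].strip().lower()}
        if row["id"] in seen:
            continue  # duplicate record, skip it
        seen.add(row["id"])
        cleaned.append(row)
    return cleaned


def validate(row):
    """Data validation: return a list of rule violations (empty means valid)."""
    errors = []
    if "@" not in row["email"]:
        errors.append("email missing '@'")
    if not 0 <= row["age"] <= 130:
        errors.append("age out of range")
    return errors


def split_valid(rows):
    """Cleanse first, then separate valid rows from rejected ones."""
    valid, rejected = [], []
    for row in cleanse(rows):
        errors = validate(row)
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "  Ada@Example.com ", "age": 36},
        {"id": 1, "email": "ada@example.com", "age": 36},
        {"id": 2, "email": "not-an-email", "age": 200},
    ]
    print(split_valid(sample))
```

Returning rejected rows together with their reasons, rather than silently dropping them, makes it possible to review and fix bad data at the source.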

Load Phase

The load phase is the final step of an ETL process: writing the transformed data into the target destination, such as a data warehouse, database, or data lake. The goal is to load the data efficiently while preserving its integrity and consistency.

Types of Loading Processes

- Full Load: This process involves loading all the data from the source into the target system. It is suitable for smaller datasets or when the entire dataset needs to be refreshed.

- Incremental Load: In this process, only the new or updated data since the last load is extracted and loaded into the target system. This is more efficient for large datasets and reduces processing time.

- Append Load: Data is appended to the existing dataset in the target system without overwriting or replacing any existing data. This is useful for scenarios where historical data needs to be preserved.

- Merge Load: This process involves merging new data with existing data based on specific criteria, such as keys or attributes. It helps in updating existing records and inserting new ones efficiently.
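A merge load can be sketched as "update the row if its key exists, otherwise insert it". The example below does this with two plain SQL statements against an assumed `accounts` table in SQLite; many databases also offer a dedicated `MERGE` or upsert statement, which would normally be preferred where available.

```python
import sqlite3


def merge_load(conn, records):
    """Update rows whose key exists, insert the rest.

    Returns a (updated, inserted) tuple of row counts.
    """
    updated = inserted = 0
    with conn:  # one transaction: commit on success, roll back on error
        for rec_id, name, amount in records:
            cur = conn.execute(
                "UPDATE accounts SET name = ?, amount = ? WHERE id = ?",
                (name, amount, rec_id),
            )
            if cur.rowcount == 0:
                # No existing row matched the key, so this is a new record.
                conn.execute(
                    "INSERT INTO accounts (id, name, amount) VALUES (?, ?, ?)",
                    (rec_id, name, amount),
                )
                inserted += 1
            else:
                updated += 1
    return updated, inserted


if __name__ == "__main__":
    target = sqlite3.connect(":memory:")
    target.execute(
        "CREATE TABLE accounts (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    target.execute("INSERT INTO accounts VALUES (1, 'Ada', 10.0)")
    print(merge_load(target, [(1, "Ada", 25.0), (2, "Grace", 5.0)]))
```

Wrapping the whole merge in a single transaction means a failure partway through leaves the target table unchanged.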

Best Practices for Data Loading

- Optimize Data Structures: Design tables and indexes in the target system to optimize data loading performance.

- Use Bulk Loading: Utilize bulk loading techniques provided by database systems to load data in large batches, reducing processing time and improving performance.

- Data Validation: Implement data validation checks during the loading process to ensure data quality and integrity before it is stored in the target system.

- Error Handling: Develop robust error handling mechanisms to address issues during data loading, such as data format errors, duplicate records, or data integrity violations.

- Logging and Monitoring: Implement logging and monitoring mechanisms to track the progress of data loading processes, identify bottlenecks, and troubleshoot any issues that may arise.
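Several of these practices can be combined in one loading routine: insert in batches, roll back a batch that violates a constraint, and log what happened. This is only a sketch against an assumed `events` table in SQLite; the batch size, logger name, and table layout are illustrative, and production systems usually rely on the database's native bulk loader instead.

```python
import logging
import sqlite3

logger = logging.getLogger("etl.load")


def load_in_batches(conn, records, batch_size=2):
    """Load records batch by batch.

    A batch that violates a constraint is rolled back as a whole and its
    starting offset is reported, so the remaining batches can still load.
    """
    loaded, failed_batches = 0, []
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        try:
            with conn:  # commits the batch, or rolls it back on error
                conn.executemany(
                    "INSERT INTO events (id, payload) VALUES (?, ?)", batch
                )
            loaded += len(batch)
            logger.info("loaded batch at offset %d (%d rows)", start, len(batch))
        except sqlite3.IntegrityError as exc:
            failed_batches.append(start)
            logger.error("batch at offset %d rejected: %s", start, exc)
    return loaded, failed_batches


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    target = sqlite3.connect(":memory:")
    target.execute(
        "CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT NOT NULL)"
    )
    data = [(1, "a"), (2, "b"), (3, "c"), (3, "dup"), (4, "d")]
    print(load_in_batches(target, data))
```

Recording the offsets of failed batches gives operators a concrete starting point for troubleshooting and for reloading only the rows that were rejected.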

In conclusion, ETL processes serve as the backbone of efficient data management, facilitating smooth operations and data integrity. With a clear understanding of the extract, transform, and load phases, organizations can enhance their data processes and decision-making capabilities.
