Big data integration is key to getting value from data in today’s data-driven world. This article covers the challenges it poses, the benefits it delivers, the technologies that support it, and best practices for seamless integration, with real-world examples along the way.

Overview of Big Data Integration

Big data integration refers to the process of combining large volumes of data from various sources so that it can be analyzed to gain valuable insights and make informed decisions. Organizations today collect vast amounts of data from different channels, such as social media, IoT devices, and customer interactions. The significance of big data integration lies in its ability to help organizations streamline their data management processes, improve decision-making, and drive innovation.

Challenges in Big Data Integration

- Integration of diverse data sources: Organizations often struggle with integrating data from multiple sources, each with different formats, structures, and quality.

- Data security and privacy concerns: Ensuring the security and privacy of data while integrating and processing large volumes of information is a major challenge.

- Scalability and performance issues: Handling the massive volume, velocity, and variety of data in real time strains both scalability and performance.

- Data governance and compliance: Maintaining data integrity, quality, and compliance with regulations while integrating big data is a complex task.

Benefits of Efficient Big Data Integration Strategies

- Improved decision-making: By integrating data from various sources, organizations can gain a comprehensive view of their operations and customers, leading to more informed decision-making.

- Enhanced operational efficiency: Efficient big data integration allows for streamlined data processes, automation of tasks, and quicker access to insights, improving operational efficiency.

- Increased competitiveness: Organizations that effectively integrate big data can leverage insights to drive innovation, enhance customer experiences, and stay ahead of competitors.

- Cost savings: Optimizing data integration processes can reduce operational costs, minimize data redundancy, and improve resource allocation, leading to cost savings.

Technologies for Big Data Integration

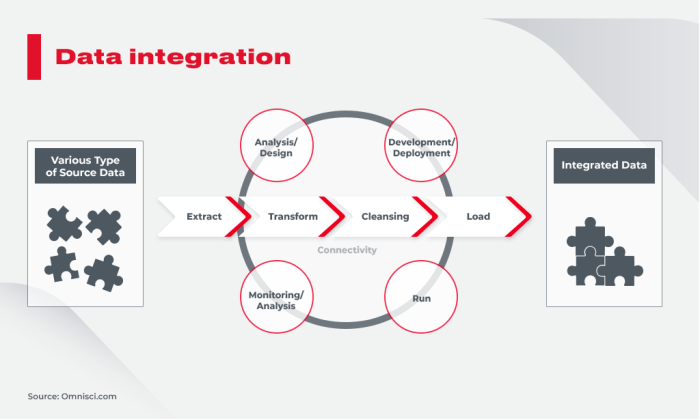

Combining and managing large volumes of data from various sources for meaningful analysis requires the right tooling. Organizations rely on a range of technologies designed to streamline data integration processes, improve data quality, and enhance overall efficiency.

ETL Tools

ETL (Extract, Transform, Load) tools are widely used in big data integration to extract data from multiple sources, transform it into a consistent format, and load it into a target data warehouse or repository. These tools automate the integration process, making it easier to handle large volumes of data efficiently. Popular ETL tools include Informatica, Talend, and Apache NiFi.
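To make the three ETL stages concrete, here is a minimal sketch using only the Python standard library. The CSV content, column names, and `customers` table are hypothetical, and an in-memory SQLite database stands in for the target warehouse; real ETL tools add scheduling, error handling, and connectors on top of this basic shape.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with inconsistent formatting.
RAW_CSV = """customer_id,name,signup_date,country
1, Alice ,2024-01-05,us
2,Bob,2024-02-10,US
3,  Carol,2024-03-15,de
"""

def extract(text):
    """Extract: parse CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cast ids to int, trim names, normalize country codes."""
    return [
        (
            int(row["customer_id"]),
            row["name"].strip(),
            row["signup_date"],
            row["country"].strip().upper(),
        )
        for row in rows
    ]

def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(1, 'Alice', '2024-01-05', 'US'), (2, 'Bob', '2024-02-10', 'US'), (3, 'Carol', '2024-03-15', 'DE')]
```

Keeping each stage as a separate function mirrors how ETL tools separate connectors, transformation logic, and sinks, so each piece can be tested or swapped independently.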

Data Virtualization

Data virtualization enables organizations to access and integrate data from various sources without physically moving the data. This technology creates a virtual layer that lets users query and analyze data in real time, regardless of where it is stored. Data virtualization tools such as Denodo and Cisco Data Virtualization (since acquired by TIBCO) help organizations achieve a unified view of their data without complex data movement.
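The core idea of a virtual layer, reading sources only at query time instead of copying them into a central store, can be illustrated with a toy Python class. This is a hypothetical sketch of the concept, not how Denodo or any other product is implemented; `VirtualLayer`, the source names, and the join logic are all assumptions for illustration.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]

class VirtualLayer:
    """Toy virtual layer: sources are registered as fetch callables and are
    only read when a query runs, so no data is copied into a central store."""

    def __init__(self) -> None:
        self._sources: Dict[str, Callable[[], Iterable[Row]]] = {}

    def register(self, name: str, fetch: Callable[[], Iterable[Row]]) -> None:
        self._sources[name] = fetch

    def join(self, left: str, right: str, key: str) -> List[Row]:
        """Fetch both sources at query time and inner-join them on `key`.
        Assumes `key` is unique within the right-hand source."""
        right_index = {row[key]: row for row in self._sources[right]()}
        result = []
        for row in self._sources[left]():
            match = right_index.get(row[key])
            if match is not None:
                result.append({**row, **match})
        return result

# Hypothetical sources: a CRM table and an orders feed, left where they are.
crm = [{"customer_id": 1, "name": "Alice"}, {"customer_id": 2, "name": "Bob"}]
orders = [{"customer_id": 1, "total": 120.0}, {"customer_id": 3, "total": 75.5}]

layer = VirtualLayer()
layer.register("crm", lambda: crm)
layer.register("orders", lambda: orders)
print(layer.join("crm", "orders", "customer_id"))
# [{'customer_id': 1, 'name': 'Alice', 'total': 120.0}]
```

Because the layer stores fetch functions rather than data, a new order appended to `orders` shows up in the next query without any reload step, which is the property that distinguishes virtualization from a copy-based pipeline.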

Apache Kafka

Apache Kafka is a distributed streaming platform commonly used for real-time data integration and processing. It allows organizations to ingest, store, and process large streams of data in real time, making it well suited to scenarios that require low latency and high throughput. Kafka was originally developed at LinkedIn, and companies such as Uber have also relied on it for real-time data integration and analytics.
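Kafka's central abstraction is a topic split into partitions, each an append-only log that consumers read by tracking an offset. The toy in-memory model below illustrates that idea in plain Python; it is not the Kafka client API, and `ToyTopic` and `ToyConsumer` are hypothetical names. Kafka's default partitioner hashes keys with murmur2; `zlib.crc32` is used here only as a stand-in.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

class ToyTopic:
    """In-memory model of a partitioned, append-only log (illustrative only)."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: List[List[Tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> Tuple[int, int]:
        # The same key always maps to the same partition, which is what
        # preserves per-key ordering. crc32 stands in for Kafka's murmur2.
        p = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

class ToyConsumer:
    """Tracks the next offset to read per partition, like a consumer group member."""

    def __init__(self, topic: ToyTopic) -> None:
        self.topic = topic
        self.offsets: Dict[int, int] = defaultdict(int)

    def poll(self) -> List[Tuple[str, str]]:
        records: List[Tuple[str, str]] = []
        for p, log in enumerate(self.topic.partitions):
            records.extend(log[self.offsets[p]:])
            self.offsets[p] = len(log)  # "commit" the new position
        return records

topic = ToyTopic(num_partitions=3)
topic.produce("user-1", "login")
topic.produce("user-1", "click")
topic.produce("user-2", "login")

consumer = ToyConsumer(topic)
first = consumer.poll()   # all three records
second = consumer.poll()  # nothing new since the last poll
```

Since the log is retained and consumers only move an offset, several independent consumers can read the same stream at their own pace, which is a large part of why Kafka works well as an integration backbone.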

Apache Spark

Apache Spark is a powerful open-source framework for big data processing and analytics. It provides a unified platform for batch processing, real-time stream processing, machine learning, and graph processing, making it a versatile tool for big data integration. Organizations like Netflix and Airbnb use Apache Spark to process and analyze large datasets efficiently.

Amazon Web Services (AWS) Glue

AWS Glue is a fully managed ETL service provided by Amazon Web Services. It simplifies building and managing ETL workflows for big data integration in the cloud. With features like data cataloging, job scheduling, and serverless execution, AWS Glue enables organizations to automate and scale their data integration processes effectively.

Best Practices for Big Data Integration

When dealing with large volumes of data, it is crucial to follow best practices to ensure seamless integration. Data governance, data quality, and metadata management all play a significant role in the success of big data integration processes.

Data Governance and Data Quality

Data governance involves establishing processes and policies to ensure that data meets certain standards of quality, integrity, and security. It is essential to have a clear framework in place to manage data effectively throughout the integration process. Data quality, on the other hand, focuses on the accuracy, completeness, and consistency of data. By maintaining high data quality standards, organizations can avoid errors and ensure that the integrated data is reliable for analysis and decision-making.

- Establish data governance policies and procedures to maintain data integrity.

- Implement data quality checks and validations to ensure the accuracy of integrated data.

- Regularly monitor and audit data to identify and resolve quality issues promptly.
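Data quality checks like those listed above are often expressed as named rules applied to each record. The sketch below is a hypothetical, minimal version in plain Python: the three rules (completeness, range validity, format consistency) and the field names are assumptions chosen for illustration, not a standard rule set.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]

@dataclass
class Rule:
    name: str
    check: Callable[[Record], bool]

# Hypothetical rules covering completeness, validity, and consistency.
RULES = [
    Rule("email_present", lambda r: bool(r.get("email"))),
    Rule("age_in_range", lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120),
    Rule(
        "country_is_iso2",
        lambda r: isinstance(r.get("country"), str)
        and len(r["country"]) == 2
        and r["country"].isupper(),
    ),
]

def validate(records: List[Record], rules: List[Rule]) -> Tuple[List[Record], List[Tuple[int, str]]]:
    """Split records into valid rows and a list of (record index, failed rule) issues."""
    valid: List[Record] = []
    issues: List[Tuple[int, str]] = []
    for i, record in enumerate(records):
        failed = [rule.name for rule in rules if not rule.check(record)]
        if failed:
            issues.extend((i, name) for name in failed)
        else:
            valid.append(record)
    return valid, issues

records = [
    {"email": "a@example.com", "age": 34, "country": "US"},
    {"email": "", "age": 34, "country": "US"},
    {"email": "c@example.com", "age": 150, "country": "usa"},
]
valid, issues = validate(records, RULES)
# valid  -> only the first record
# issues -> [(1, 'email_present'), (2, 'age_in_range'), (2, 'country_is_iso2')]
```

Returning the failures instead of silently dropping rows supports the monitoring and auditing step: the issue list can be logged, counted over time, or routed back to the owning source system.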

Metadata Management

Metadata management involves defining and managing the data structures, relationships, and attributes that describe the integrated data. It provides essential information about data sources, formats, and transformations, helping organizations understand and utilize the integrated data effectively. Proper metadata management is crucial for maintaining data lineage, tracking changes, and ensuring data consistency across the integration process.

- Document metadata definitions and standards to facilitate data understanding and usage.

- Implement metadata repositories or catalogs to centralize metadata storage and access.

- Ensure metadata consistency and accuracy to support data integration and analysis.
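A metadata repository can be as simple as a registry of dataset descriptions that also records which datasets each one was derived from. The following sketch is hypothetical (class names, fields, and dataset names are assumptions); it shows how centralized metadata makes lineage questions answerable and how a consistency check can reject entries that reference unknown inputs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

@dataclass
class DatasetMetadata:
    name: str
    source_system: str
    schema: Dict[str, str]                               # column name -> type
    upstream: List[str] = field(default_factory=list)    # lineage: direct inputs
    transformation: str = ""                             # human-readable description

class MetadataCatalog:
    """Minimal central catalog: stores dataset metadata and answers lineage queries."""

    def __init__(self) -> None:
        self._entries: Dict[str, DatasetMetadata] = {}

    def register(self, meta: DatasetMetadata) -> None:
        # Consistency check: every upstream dataset must already be cataloged.
        missing = [u for u in meta.upstream if u not in self._entries]
        if missing:
            raise ValueError(f"unknown upstream datasets: {missing}")
        self._entries[meta.name] = meta

    def lineage(self, name: str) -> Set[str]:
        """Return every dataset that `name` derives from, directly or indirectly."""
        seen: Set[str] = set()
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(self._entries[current].upstream)
        return seen

catalog = MetadataCatalog()
catalog.register(DatasetMetadata("crm_customers", "CRM", {"id": "int", "email": "str"}))
catalog.register(DatasetMetadata("web_events", "Clickstream", {"user_id": "int", "event": "str"}))
catalog.register(DatasetMetadata(
    "customer_360", "Warehouse", {"id": "int", "events": "int"},
    upstream=["crm_customers", "web_events"], transformation="join on customer id",
))
catalog.register(DatasetMetadata(
    "churn_features", "Warehouse", {"id": "int", "score": "float"},
    upstream=["customer_360"], transformation="aggregate events per customer",
))
print(sorted(catalog.lineage("churn_features")))
# ['crm_customers', 'customer_360', 'web_events']
```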

Overcoming Common Integration Challenges

Integrating diverse big data sources poses several challenges for organizations, ranging from technical issues to data security concerns. Overcoming these challenges is essential for successful big data integration.

Data Silos

Data silos are a common challenge in big data integration, where data is isolated in separate systems or departments, making it difficult to access and analyze. To address this challenge, organizations can implement data integration tools and platforms that enable seamless data sharing and collaboration across different sources.

Data Inconsistency

Data inconsistency, such as conflicting or duplicate information, can hinder the accuracy and reliability of big data integration. Organizations can establish data governance policies and procedures to ensure data quality and consistency across all integrated sources.
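Alongside governance policies, inconsistency is usually handled in code by normalizing a matching key and applying an explicit conflict-resolution rule. The sketch below assumes a hypothetical "most recently updated value wins, but empty values never overwrite known ones" policy and matches records on a normalized email address; real deployments choose keys and survivorship rules to fit their data.

```python
from datetime import datetime
from typing import Dict, List

def normalize_email(email: str) -> str:
    return email.strip().lower()

def deduplicate(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Merge records that share a normalized email. For conflicting fields,
    keep the value from the most recently updated record; empty values
    never overwrite a value that is already known."""
    merged: Dict[str, Dict[str, str]] = {}
    # Process oldest first so later updates overwrite earlier ones.
    for rec in sorted(records, key=lambda r: datetime.fromisoformat(r["updated_at"])):
        key = normalize_email(rec["email"])
        current = merged.setdefault(key, {})
        for name, value in rec.items():
            if value:
                current[name] = value
        current["email"] = key  # store the normalized form
    return list(merged.values())

records = [
    {"email": "Alice@Example.com", "phone": "555-0100", "city": "Boston", "updated_at": "2024-01-10"},
    {"email": "alice@example.com ", "phone": "", "city": "Cambridge", "updated_at": "2024-03-02"},
    {"email": "bob@example.com", "phone": "555-0199", "city": "Denver", "updated_at": "2024-02-01"},
]
clean = deduplicate(records)
# Alice's two records collapse into one: the newer city wins,
# while the older phone number is kept because the newer one is empty.
```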

Data Latency

Data latency, the delay in data processing and analysis, can impact decision-making and real-time insights in big data integration. To overcome this challenge, organizations can leverage technologies like in-memory computing and real-time data processing to reduce latency and improve data processing speed.

Data Security and Privacy

Ensuring data security and privacy is crucial in big data integration, especially when dealing with sensitive or confidential information. Organizations can implement encryption, access controls, and data anonymization techniques to protect data from unauthorized access and breaches.
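One common anonymization-style technique is pseudonymization: replacing identifiers with keyed hashes so that integrated datasets can still be joined on the same token without exposing the raw value. The sketch below uses HMAC-SHA256 from Python's standard library; the field names are hypothetical, and in practice the key would come from a secrets manager rather than being generated in the script. Note that this is pseudonymization rather than full anonymization, since anyone holding the key can recompute tokens for guessed inputs.

```python
import hashlib
import hmac
import secrets

def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 hex digest.
    The same value and key always yield the same token, so joins still work.
    Unlike a plain unkeyed hash, tokens cannot be recomputed for guessed
    values without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record: dict, sensitive_fields: set, key: bytes) -> dict:
    """Return a copy of `record` with the sensitive fields pseudonymized."""
    return {
        k: pseudonymize(str(v), key) if k in sensitive_fields else v
        for k, v in record.items()
    }

# Assumption: a real deployment would load this key from a secrets manager.
KEY = secrets.token_bytes(32)

rec = {"user_id": "u-123", "email": "alice@example.com", "plan": "pro"}
masked = mask_record(rec, {"user_id", "email"}, KEY)
# "plan" is unchanged; "user_id" and "email" become 64-character hex tokens.
```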

In conclusion, mastering big data integration is crucial for organizations to overcome challenges and unlock the full potential of their data. Implementing best practices and leveraging the right technologies can lead to successful integration and data utilization.
